Multidisciplinary Design Analysis and Optimization … MDAO

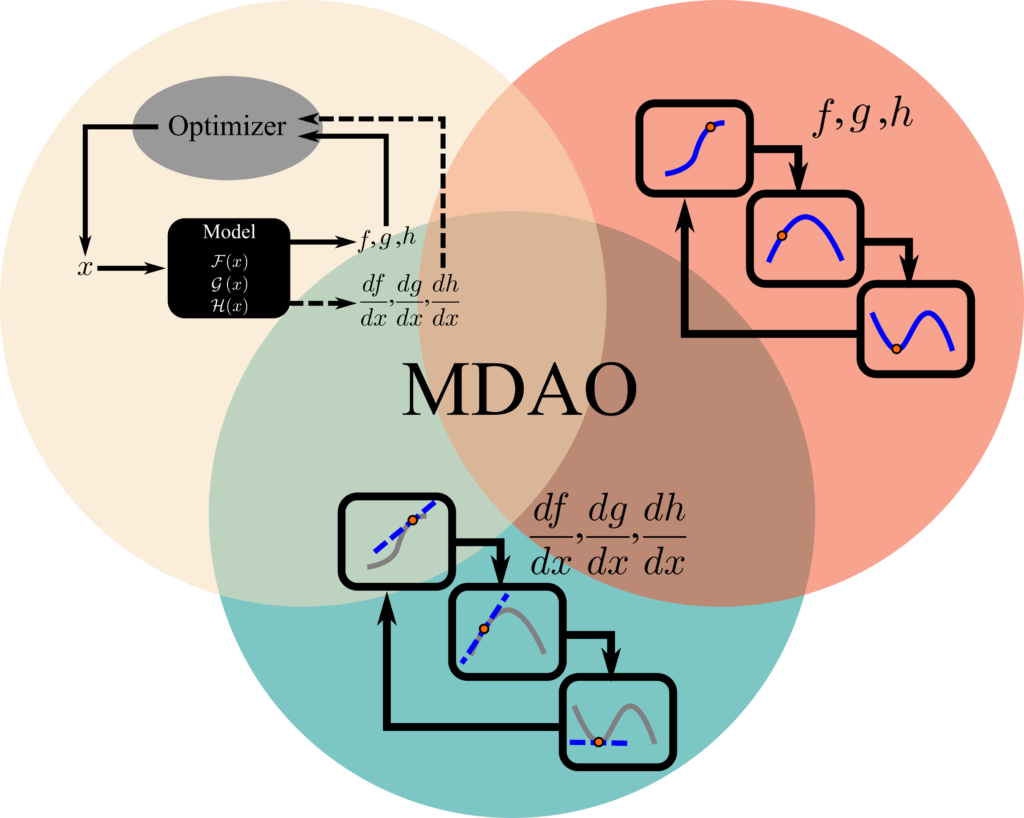

Practitioners of MDAO must develop skills in three separate, but closely related, areas: nonlinear optimization, model construction, and model differentiation. An undergraduate STEM education will likely have given you a brief introduction to all three, and this is enough to get most people started with MDAO.

As your models grow more complex, you start to hit walls: your model won’t converge, the optimizer can’t find a “good” answer, the compute cost grows too high, etc. We’ve built OpenMDAO to help alleviate many of these common problems, but it has become a bit of an arms race between the development team and the users. As we add features to solve existing common challenges, our users tend to add model complexity till they run into new ones. I don’t (yet) have a solution to end the arms race, but I want to draw a clearer picture of the battlefield.

So let’s take a closer look at the three areas I have deemed the keys to success in MDAO. I obviously can’t cover everything, but even just understanding the classification can help you narrow down your problem area and enable a more focused search for solutions.

Nonlinear Optimization

Do you know what “optimization” is? Some have joked that it’s an automatic process to find all the bugs, holes, and weaknesses in your model… if you found yourself wincing at that jab, it’s because you know there is more than a little truth to that 🙁

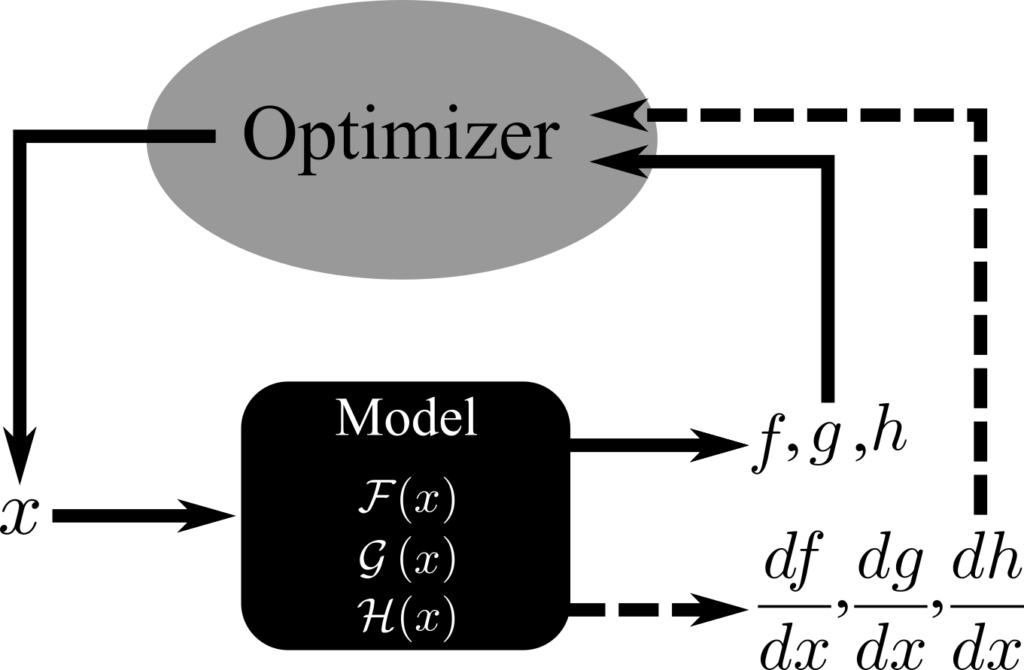

A more mathematical perspective on “optimization” is as a means of converting an underdefined problem with an infinite number of solutions (more degrees of freedom than equations to constrain them) into a well-defined one with a single solution (equal number of degrees of freedom and equations). This conversion is done by using the objective function (usually represented by “f”) to say which solution you prefer. Sometimes there are also constraints (represented by “g” and “h”), which help to further constrain the solution space.
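Written out, that idea looks something like the sketch below. One assumption on my part: I am using “g” for inequality constraints and “h” for equality constraints, which is a common textbook convention but not the only one. The second line is a tiny illustrative example: the single equation x_1 + x_2 = 1 has infinitely many solutions, and adding an objective picks exactly one of them.

```latex
\min_{x} \; f(x)
\quad \text{subject to} \quad
g(x) \le 0, \qquad h(x) = 0

% Illustrative example: one equation, two unknowns, plus an objective
\min_{x_1, x_2} \; x_1^2 + x_2^2
\quad \text{subject to} \quad
x_1 + x_2 - 1 = 0
\quad \Longrightarrow \quad
x_1 = x_2 = \tfrac{1}{2}
```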

There are lots of different kinds of optimizers, some that use derivatives and some that don’t. Optimizers see your model as a black box that maps design variables to objective and constraint values (and sometimes the associated derivatives). The optimizer doesn’t care how you accomplish that mapping, as long as you give it the data it needs.
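To make the black-box idea concrete, here is a minimal sketch in plain Python. The model (`black_box`), its derivative, and both toy optimizers are hypothetical stand-ins I made up for illustration; real optimizer libraries are far more capable. The point is only that one optimizer asks the box for function values, while the other asks for derivatives, and neither looks inside.

```python
import math


def black_box(x):
    """Hypothetical model: maps one design variable to an objective value."""
    return (x - 2.0) ** 2 + 1.0


def black_box_derivative(x):
    """Derivative of the objective, which only some optimizers ask for."""
    return 2.0 * (x - 2.0)


def golden_section(f, lo, hi, tol=1e-6):
    """Derivative-free optimizer: shrinks a bracket using only f(x) values.

    Assumes f has a single minimum inside [lo, hi].
    """
    ratio = (math.sqrt(5.0) - 1.0) / 2.0
    while hi - lo > tol:
        c = hi - ratio * (hi - lo)
        d = lo + ratio * (hi - lo)
        if f(c) < f(d):
            hi = d  # the minimum lies in [lo, d]
        else:
            lo = c  # the minimum lies in [c, hi]
    return 0.5 * (lo + hi)


def gradient_descent(df, x0, step=0.25, tol=1e-10, max_iter=1000):
    """Gradient-based optimizer: follows the derivative downhill."""
    x = x0
    for _ in range(max_iter):
        grad = df(x)
        if abs(grad) < tol:
            break
        x -= step * grad
    return x


x_derivative_free = golden_section(black_box, 0.0, 5.0)
x_gradient_based = gradient_descent(black_box_derivative, x0=0.0)
print(x_derivative_free, x_gradient_based)
```

Both optimizers should land near x = 2 for this toy problem, even though they consume different data from the box.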

A lot of optimization challenges occur because you have picked a bad objective function, a bad constraint, or have too many constraints. I find it useful to think of the black-box picture when trying to diagnose these kinds of problem-formulation issues. You can ignore all of the internal workings of your model and assume (or pretend, wish, fantasize) that it will work perfectly for any given inputs. Then think about how you expect the outputs to react to the inputs, and look for holes there.

OpenMDAO, despite the “O” in the name, doesn’t actually address the optimization area that much. We have a driver interface that provides access to a few optimizers. Our interface is a useful abstraction that makes it easier to swap between optimizers, but it does not provide the optimization algorithms itself. If you already have a code that provides function evaluations (and derivatives) of everything you care about, then you might actually be better off interfacing directly with some of the optimizer libraries themselves.

Model Construction

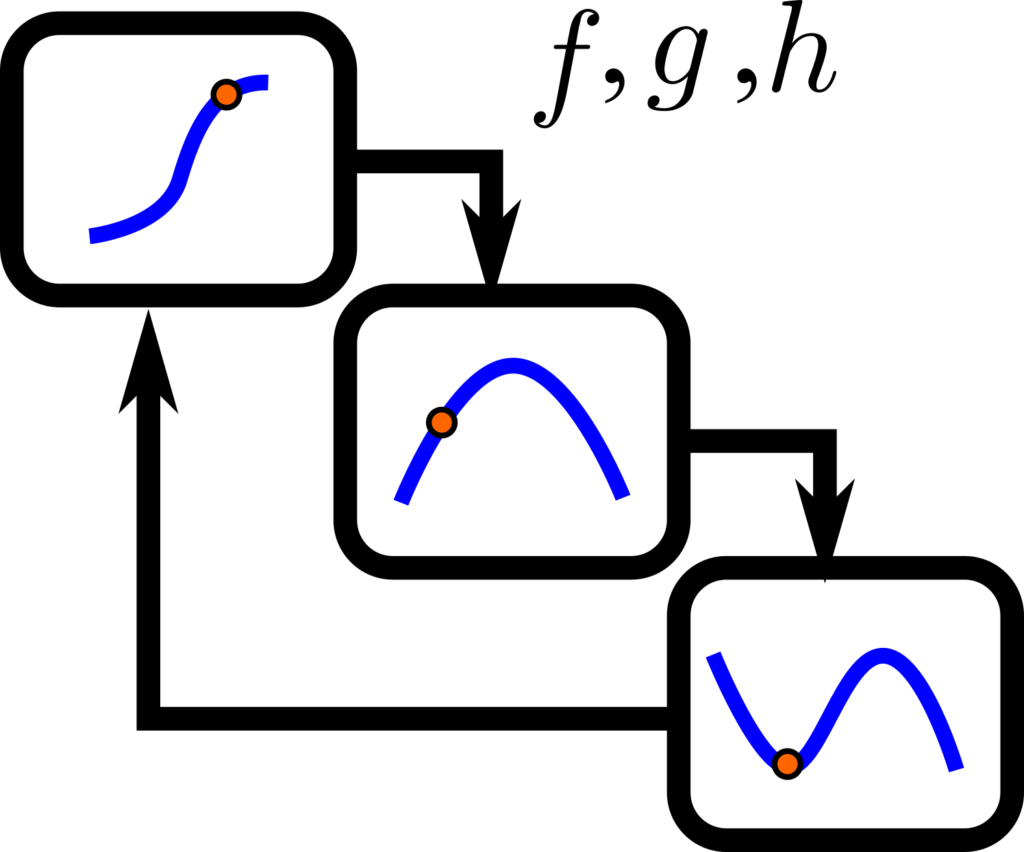

The black box perspective says f=F(x). Model construction is everything that has to go into making those six characters actually compute f, for any reasonable value of x. There are two fundamental things you have to deal with when doing model construction: data passing and convergence.

Data passing is usually fairly straightforward. If you’re writing code, it can be as simple as passing the return values of one function as the arguments to the next. Some models use file I/O to pass complex data formats around. Generally speaking, data passing is something you probably don’t think about a lot, but depending on what you’re doing it can be pretty important. That’s especially true in any kind of parallel memory situation, or if you have to work on geographically distributed computing and across firewalls. OpenMDAO uses a pretty fancy scheme based on the MAUD architecture that works well both in serial and distributed situations.

With regard to convergence, there is a whole spectrum from trivial to insanely hard challenges that you may encounter. Some models are composed of explicit functions arranged in a purely feed-forward sequence. If your model looks like this, I’m jealous! Most of the time though, you end up with some implicit functions in the model. Maybe one of the boxes is implicit (i.e. there is a nonlinear solver inside it), or you have a cycle in the data flow between boxes that requires a top-level solver. Often you have both implicit boxes and cyclic data flows… that’s when things really start to get interesting. Making models sufficiently stable, so that for any value of “x” you can converge on a value of “f”, is critically important. I promise you that if there is some area where your solvers don’t converge, an optimizer is going to find it!
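Here is a small sketch of what a cyclic data flow can look like, and how it can bite you. Everything in it is hypothetical: two made-up explicit boxes, `box_a` and `box_b`, that each need the other’s output, and a simple loop that keeps re-evaluating them until the values stop changing. This is plain Python, not OpenMDAO code.

```python
def box_a(x, y2):
    """Hypothetical explicit box: needs the output of box B."""
    return 1.0 + x * y2


def box_b(y1):
    """Hypothetical explicit box: needs the output of box A."""
    return 0.5 * y1


def objective(y1, y2):
    """Hypothetical objective computed from the converged states."""
    return y1 + y2


def evaluate_model(x, tol=1e-10, max_iter=100):
    """Try to converge the A <-> B cycle, then compute f.

    Returns (f, converged, iterations).
    """
    y1, y2 = 1.0, 0.0  # initial guesses
    for iteration in range(1, max_iter + 1):
        y1_new = box_a(x, y2)
        y2_new = box_b(y1_new)
        change = max(abs(y1_new - y1), abs(y2_new - y2))
        y1, y2 = y1_new, y2_new
        if change < tol:
            return objective(y1, y2), True, iteration
    return objective(y1, y2), False, max_iter


print(evaluate_model(1.0))  # the loop settles down for this x
print(evaluate_model(3.0))  # the loop blows up for this x
```

For this toy model the loop only converges when |x| < 2. At x = 3 a perfectly valid solution exists (y1 = -2, y2 = -1), but the simple loop can’t find it. An optimizer that is free to move x around will wander into that region sooner or later.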

Often you have very complex graphs of data flow with lots of different cycles (and cycles within cycles). These problems are where OpenMDAO’s unique hierarchical model structure starts to provide real advantages.

I used the term “nonlinear solver”, which you may or may not be familiar with. I am quite certain you’ve used one before. Perhaps you’ve written a while loop to iterate till some error term gets below a tolerance? That’s called a fixed-point solver, or alternatively a nonlinear Gauss-Seidel solver. There is the classic Newton’s method, and a bunch of variations that are very similar; scipy has a nice collection to check out. Of course, OpenMDAO has a good selection too.
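If you want to see the two flavors side by side, here is a minimal sketch in plain Python. The equation y = cos(y) is just an illustrative choice of mine, and the helper names (`fixed_point`, `newton`) are hypothetical; neither is the scipy or OpenMDAO implementation.

```python
import math


def fixed_point(g, y0, tol=1e-12, max_iter=500):
    """Iterate y <- g(y) until successive values agree to within tol."""
    y = y0
    for iteration in range(1, max_iter + 1):
        y_new = g(y)
        if abs(y_new - y) < tol:
            return y_new, iteration
        y = y_new
    raise RuntimeError("fixed-point iteration did not converge")


def newton(residual, d_residual, y0, tol=1e-12, max_iter=50):
    """Drive residual(y) to zero using the derivative of the residual."""
    y = y0
    for iteration in range(1, max_iter + 1):
        r = residual(y)
        if abs(r) < tol:
            return y, iteration
        y -= r / d_residual(y)
    raise RuntimeError("Newton's method did not converge")


# Solve y = cos(y) two ways, starting from the same guess.
y_fp, n_fp = fixed_point(math.cos, 1.0)
y_nt, n_nt = newton(
    lambda y: y - math.cos(y),   # residual R(y) = y - cos(y)
    lambda y: 1.0 + math.sin(y),  # dR/dy
    1.0,
)
print(y_fp, n_fp)
print(y_nt, n_nt)
```

Both solvers should agree on the answer, but Newton’s method gets there in far fewer iterations on this problem. The price is that it needs the derivative of the residual.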

Model Differentiation

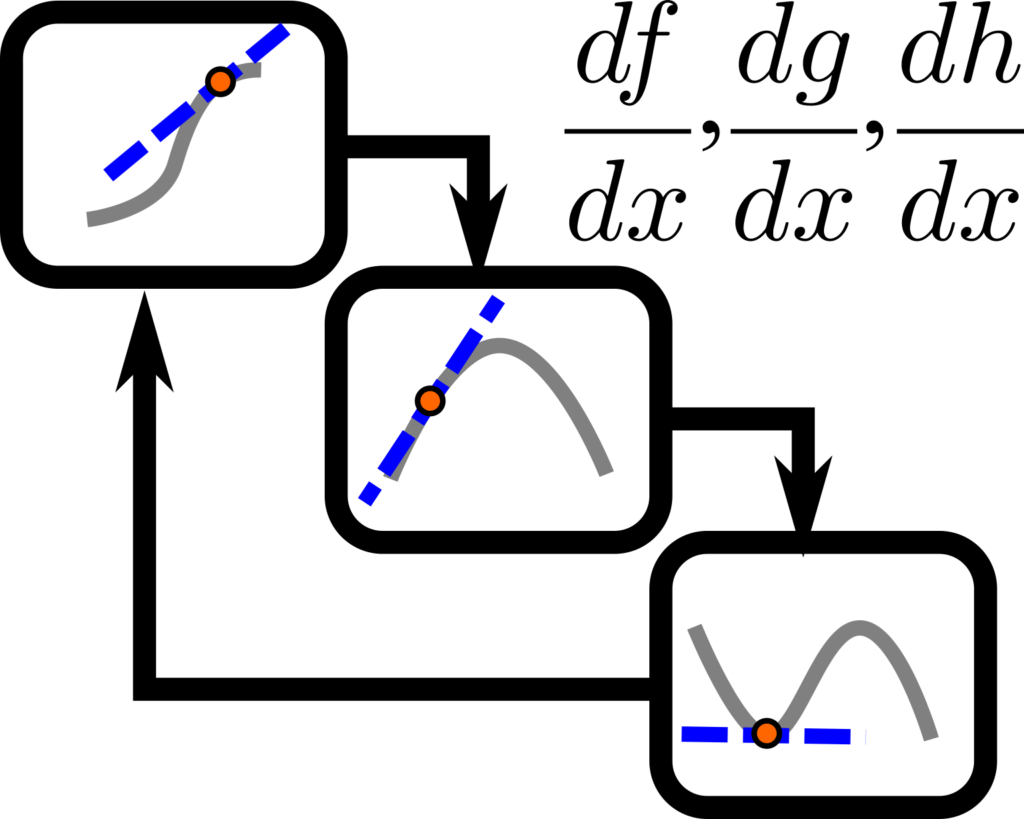

Not every MDAO application demands derivatives, but they are usually needed whenever you have expensive function evaluations, large numbers of design variables, or require very tight convergence. Many MDAO practitioners avoid this topic area. It’s understandable, since it’s both challenging and potentially time consuming. But derivatives offer many orders of magnitude computational speedup and greatly improved numerical stability, so they are well worth considering!

This picture looks a lot like the one for model construction. That is intentional. One way to think of this whole process is that you could replace every single box in the model with its local linearized representation, then finite-difference over the whole model to compute the total derivatives. That’s not a particularly efficient or simple implementation, but it’s a nice conceptual context to understand what’s needed.
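Here is that thought experiment as a minimal sketch, assuming a hypothetical two-box feed-forward model (`box1` feeding `box2`) with hand-written partial derivatives. Because the linearized model is, well, linear, finite-differencing it gives the total derivative without any truncation error, no matter how big the step is.

```python
import math


def box1(x):
    """Hypothetical first box."""
    return x ** 2 + 1.0


def box1_partial(x):
    """Partial derivative d(box1)/dx."""
    return 2.0 * x


def box2(y):
    """Hypothetical second box, fed by box1."""
    return math.sin(y)


def box2_partial(y):
    """Partial derivative d(box2)/dy."""
    return math.cos(y)


def linearized_model(x0):
    """Replace each box with its local linearization about the point x0."""
    y0 = box1(x0)
    f0 = box2(y0)
    slope1 = box1_partial(x0)
    slope2 = box2_partial(y0)

    def lin_box1(x):
        return y0 + slope1 * (x - x0)

    def lin_box2(y):
        return f0 + slope2 * (y - y0)

    return lambda x: lin_box2(lin_box1(x))


def total_derivative(x0, step=1.0):
    """Finite-difference over the linearized model to get df/dx at x0."""
    lin = linearized_model(x0)
    return (lin(x0 + step) - lin(x0)) / step


x0 = 0.7
print(total_derivative(x0))
print(2.0 * x0 * math.cos(x0 ** 2 + 1.0))  # chain rule by hand, for comparison
```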

There are lots of ways to compute derivatives. There are numerical approaches such as finite-difference and complex-step methods. You likely learned about manual differentiation techniques based on the chain rule. You may have also used symbolic differentiation (e.g. sympy) when you found it tedious to manually work through all the equations. Some modern scientific computing languages offer a related technique called algorithmic differentiation, which applies the chain rule to computer code directly (rather than having to type the equations into a symbolic engine and convert the result back to code). These computational tools have their roots in the 1960s, when particle physicists were struggling with massive volumes of equations. The 1999 Nobel Prize in Physics went to two scientists, Gerard ’t Hooft and Martinus Veltman, whose work relied on pioneering computer algebra techniques!
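As a quick taste of the numerical approaches, here is a minimal sketch comparing a forward finite difference and the complex-step method against a hand-derived derivative. The test function and the step sizes are illustrative choices of mine, not recommendations.

```python
import cmath
import math


def f(x):
    """Hypothetical smooth function; cmath lets it accept complex inputs."""
    return cmath.exp(x) * cmath.sin(x)


def analytic(x):
    """Derivative worked out by hand with the product rule."""
    return math.exp(x) * (math.sin(x) + math.cos(x))


def finite_difference(func, x, h=1e-6):
    """Forward difference: subtracts two nearly equal numbers, so h can't be too small."""
    return ((func(x + h) - func(x)) / h).real


def complex_step(func, x, h=1e-30):
    """Complex step: no subtraction involved, so h can be tiny."""
    return func(complex(x, h)).imag / h


x = 1.5
print("analytic         ", analytic(x))
print("finite difference", finite_difference(f, x))
print("complex step     ", complex_step(f, x))
```

The finite-difference result should only match the hand-derived value to a handful of digits, while the complex-step result should match to roughly machine precision. The catch is that every operation in your function has to work correctly with complex numbers.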

All of these techniques work fairly well when you have feed-forward models of explicit functions. Whenever you have implicit functions, or any kind of cycles in your data passing, the chain rule (and the blunt application of symbolic or algorithmic differentiation) starts to break down. More complex techniques like adjoint derivatives, direct derivatives, or the unified derivative equations become valuable. These topics are some of the least widely known amongst MDAO practitioners, and they are also where OpenMDAO offers the most advanced and unique features. OpenMDAO lets you take advantage of the unified derivative equations without actually having to understand them at all!
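To give a flavor of what the direct and adjoint approaches involve, here is a minimal hand-rolled sketch for a hypothetical model with one design variable x and two coupled states y1 and y2. The model, the residual form R(x, y) = 0, and every helper name are my own illustrative assumptions; this is not how OpenMDAO implements things internally, and it does not touch the unified derivative equations.

```python
def solve_states(x, tol=1e-14, max_iter=200):
    """Converge the coupled states for a given x with a simple loop."""
    y1, y2 = 0.0, 0.0
    for _ in range(max_iter):
        y1_new = x ** 2 - 0.2 * y2
        y2_new = x + 0.1 * y1_new ** 2
        done = abs(y1_new - y1) + abs(y2_new - y2) < tol
        y1, y2 = y1_new, y2_new
        if done:
            break
    return y1, y2


def objective(x):
    """f = y1 + y2, evaluated at the converged states."""
    y1, y2 = solve_states(x)
    return y1 + y2


def partials(x, y1, y2):
    """Partials of R1 = y1 - x**2 + 0.2*y2, R2 = y2 - x - 0.1*y1**2, and of f."""
    dR_dy = [[1.0, 0.2], [-0.2 * y1, 1.0]]
    dR_dx = [-2.0 * x, -1.0]
    df_dy = [1.0, 1.0]
    df_dx = 0.0
    return dR_dy, dR_dx, df_dy, df_dx


def solve_2x2(a, b):
    """Solve the 2x2 linear system a @ z = b with Cramer's rule."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    return [(b[0] * a[1][1] - a[0][1] * b[1]) / det,
            (a[0][0] * b[1] - a[1][0] * b[0]) / det]


def total_derivative_direct(x):
    """Direct method: solve dR/dy * dy/dx = -dR/dx, then chain into f."""
    y1, y2 = solve_states(x)
    dR_dy, dR_dx, df_dy, df_dx = partials(x, y1, y2)
    dy_dx = solve_2x2(dR_dy, [-dR_dx[0], -dR_dx[1]])
    return df_dx + df_dy[0] * dy_dx[0] + df_dy[1] * dy_dx[1]


def total_derivative_adjoint(x):
    """Adjoint method: solve (dR/dy)^T * psi = (df/dy)^T, then combine with dR/dx."""
    y1, y2 = solve_states(x)
    dR_dy, dR_dx, df_dy, df_dx = partials(x, y1, y2)
    transposed = [[dR_dy[0][0], dR_dy[1][0]],
                  [dR_dy[0][1], dR_dy[1][1]]]
    psi = solve_2x2(transposed, df_dy)
    return df_dx - (psi[0] * dR_dx[0] + psi[1] * dR_dx[1])


print(total_derivative_direct(1.0))
print(total_derivative_adjoint(1.0))
```

Both routes should give the same number. The practical difference is cost: the direct method needs one linear solve per design variable, while the adjoint method needs one per output, so which one wins depends on the shape of your problem.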